Today I’d like to talk about one of the biggest improvements that we’re bringing to Netwisp’s services: tiered storage featuring solid state drives (SSD). This is an exciting change because storage speed has always been one of our biggest bottlenecks for web hosting services. When we started back in 2004, 80GB hard drives were the standard for hosting. Today, for the same price, you can get a hard drive that holds 8000GB. However, while you can store 100 times more data on a single drive, it’s only about twice as fast. What this means is that rather than taking a few hours to replace a failed hard drive and restore its data, it can take days. It also handles the same number of IO requests as the 80GB hard drive (about 125 per second). So while drives today are able to hold much more data, they are not much faster at getting that data on and off the disk.
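To put rough numbers on that gap, here is a small back-of-the-envelope sketch in Python. It only uses the figures quoted above (80GB vs 8000GB, about 125 IO requests per second, about twice the speed); no absolute transfer rate is assumed, so the result is a ratio rather than an actual restore time.

```python
# Figures quoted in the post. The "twice as fast" number is relative,
# so no absolute MB/s value is assumed here.
OLD_CAPACITY_GB = 80
NEW_CAPACITY_GB = 8000
IOPS_PER_DRIVE = 125        # roughly the same for both drives
THROUGHPUT_SPEEDUP = 2      # the new drive is "about twice as fast"

# 100x the data at 2x the speed means about 50x longer to copy a full drive.
capacity_ratio = NEW_CAPACITY_GB / OLD_CAPACITY_GB
restore_time_ratio = capacity_ratio / THROUGHPUT_SPEEDUP

# IO requests per second available for each GB stored on the drive.
old_iops_per_gb = IOPS_PER_DRIVE / OLD_CAPACITY_GB
new_iops_per_gb = IOPS_PER_DRIVE / NEW_CAPACITY_GB

print(f"Full-drive copy takes about {restore_time_ratio:.0f}x longer")
print(f"IOPS per GB: {old_iops_per_gb:.4f} then, {new_iops_per_gb:.6f} now")
```

A restore that used to take a couple of hours stretching out by a factor of 50 is how you end up waiting days, and each stored gigabyte now gets one hundredth of the IO capacity it used to.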

A few years ago, solid state drives finally started to change this equation. They are based on flash memory rather than spinning platters, so they can handle up to tens of thousands of IO requests a second, which is a huge improvement over mechanical drives. At first they weren’t that reliable, but over the past couple of years they’ve matured to the point where we can start adding them into our services. The two most popular methods hosting companies have used for integrating SSD have been either using it as a caching system or, more recently, switching entirely to SSD drives. I’ll briefly discuss what these two methods are.

SSD As A Caching Layer

This has been a popular solution for companies looking to use SSD to improve the performance of their storage. How it works is that data that is often requested gets duplicated to the SSD drives so that it can be accessed from there rather than from the slower mechanical drives. There are many solutions out there for using SSD as a cache. Facebook, for example, has developed FlashCache for using SSD to help cache their website content. While these solutions do work well, there are a couple of drawbacks. The first is that the SSD drives can’t be used for storage. If the SSD drives are 500GB in size, that is 500GB that we can’t use for storing people’s data.

The second drawback is that caching can create a lot of unnecessary IO, which defeats the purpose of using SSD drives as a cache. For one, the system will eventually fill up the drive with cached data. With the 500GB drives above, that means the overhead of copying 500GB of data to the SSD, even if much of that data never needed to be cached, and all that copying comes with a performance hit. Also, any time cached data changes, you end up with two writes: the first goes to the SSD drives, and the second goes (eventually) to the mechanical drives. Since all writes still have to reach the slower drives, those drives may still be too slow to keep up with the traffic.
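The double-write problem is easier to see with a toy model. The sketch below is a simplified illustration in Python, not how FlashCache or any real caching product is implemented: the `CachedStore` class, its counters, and the block names are all made up for this example. It keeps the most recently used blocks on a small "SSD" and counts how many writes land on each device.

```python
from collections import OrderedDict


class CachedStore:
    """Toy model of an SSD cache in front of a mechanical disk."""

    def __init__(self, cache_blocks):
        self.cache_blocks = cache_blocks
        self.ssd = OrderedDict()   # block -> data, least recently used first
        self.disk = {}             # the slower mechanical drive
        self.dirty = set()         # cached blocks not yet written to disk
        self.ssd_writes = 0
        self.disk_writes = 0
        self.disk_reads = 0

    def _flush(self, block, data):
        self.disk[block] = data
        self.disk_writes += 1
        self.dirty.discard(block)

    def _put_in_cache(self, block, data):
        self.ssd[block] = data
        self.ssd.move_to_end(block)
        self.ssd_writes += 1
        # Evict least recently used blocks once the cache is full.
        while len(self.ssd) > self.cache_blocks:
            old_block, old_data = self.ssd.popitem(last=False)
            if old_block in self.dirty:
                self._flush(old_block, old_data)

    def read(self, block):
        if block in self.ssd:
            self.ssd.move_to_end(block)
            return self.ssd[block]
        self.disk_reads += 1
        data = self.disk[block]
        self._put_in_cache(block, data)   # filling the cache costs an SSD write
        return data

    def write(self, block, data):
        self.dirty.add(block)
        self._put_in_cache(block, data)

    def flush_all(self):
        for block in list(self.dirty):
            self._flush(block, self.ssd[block])


store = CachedStore(cache_blocks=2)
for i in range(3):
    store.write(i, f"v{i}")
store.flush_all()
print(store.ssd_writes, store.disk_writes)   # prints "3 3"
```

Three logical writes turned into three SSD writes plus three disk writes, so the mechanical drive still sees every change. Reading a block that has been evicted adds yet another SSD write just to bring it back into the cache.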

Pure SSD Storage

This has been the other route many providers offer, and while it offers the highest performance, there is a drawback: it’s very expensive. A 2TB enterprise-class SSD still costs over $1000. For the cost of one single SSD drive, we could instead get over 40TB of mechanical storage (20 2TB SATA drives). Having an SSD-only setup limits how much storage we can offer, and I’ll show why tiered storage makes better sense than a pure SSD setup.
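Here is the same comparison as a quick Python calculation. The prices are the rounded figures from the paragraph above (which says "over $1000" and "over 40TB"), and the 10% hot fraction is a made-up number used only to show how a mix of the two would be priced.

```python
SSD_PRICE_USD = 1000          # "over $1000" for one 2TB enterprise SSD
SSD_CAPACITY_TB = 2
HDD_TB_FOR_SAME_PRICE = 40    # "over 40TB" of SATA drives for the same money

ssd_cost_per_tb = SSD_PRICE_USD / SSD_CAPACITY_TB          # about $500 per TB
hdd_cost_per_tb = SSD_PRICE_USD / HDD_TB_FOR_SAME_PRICE    # about $25 per TB


def blended_cost_per_tb(hot_fraction):
    """Cost per TB when only hot_fraction of the data lives on SSD."""
    return hot_fraction * ssd_cost_per_tb + (1 - hot_fraction) * hdd_cost_per_tb


HOT_FRACTION = 0.10   # hypothetical share of data that needs SSD speed

print(f"SSD:     ${ssd_cost_per_tb:.2f} per TB")
print(f"HDD:     ${hdd_cost_per_tb:.2f} per TB")
print(f"Blended: ${blended_cost_per_tb(HOT_FRACTION):.2f} per TB")
```

With these rounded numbers SSD storage costs about 20 times as much per terabyte, so the smaller the share of data that really needs SSD speed, the closer a mixed setup gets to mechanical-drive pricing.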

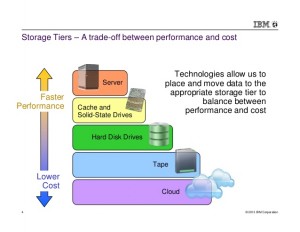

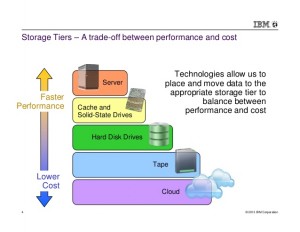

Tiered Storage

Tiered storage is where the system migrates your data between faster and slower drives based on how often it’s being accessed.

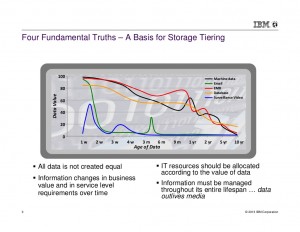

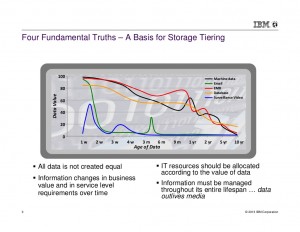

The way it works is pretty simple. If you have data that is used often, it gets moved to faster storage. If it’s used less often, it gets moved to slower storage. The important thing to realize is that during transition times (let’s say you have a blog page that suddenly becomes popular), the system’s memory also works as a tier, because your website gets cached in memory. The reason tiered storage makes much better sense than a pure SSD or cached setup is that most data isn’t used that often. Here’s another IBM chart showing how data is usually used less often the longer it remains on the system.

If you think about it, websites operate the same way. Take Facebook: posts from today are the ones that are being hit, so those need to be stored on SSD. That picture you posted a year ago? It’s only viewed once or twice a week, and it doesn’t need to be on SSD storage anymore. The same goes for WordPress blog posts. The posts you wrote three years ago are likely not being seen as often as the ones on your homepage. And if you made a backup of your account or uploaded a video to share with your friends, that doesn’t need to be on SSD storage either.
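The promote-and-demote idea described above can be sketched in a few lines of Python. This is only an illustration of the concept, not the code running on our servers: the `rebalance` function, the thresholds, and the file names are all hypothetical.

```python
from collections import Counter

# Hypothetical thresholds, chosen only for illustration.
PROMOTE_AT = 100    # hits per window at or above which data moves to SSD
DEMOTE_BELOW = 10   # hits per window below which data moves back to HDD


def rebalance(placement, hits):
    """Move items between tiers based on hits in the last window.

    placement maps an item name to "ssd" or "hdd" and is updated in place.
    Returns a list of (name, old_tier, new_tier) moves.
    """
    moves = []
    for name, tier in list(placement.items()):
        count = hits[name]   # a Counter returns 0 for items with no hits
        if tier == "hdd" and count >= PROMOTE_AT:
            new_tier = "ssd"
        elif tier == "ssd" and count < DEMOTE_BELOW:
            new_tier = "hdd"
        else:
            continue         # item is already on the right tier
        placement[name] = new_tier
        moves.append((name, tier, new_tier))
    return moves


placement = {
    "todays-post.html": "hdd",
    "year-old-photo.jpg": "ssd",
    "account-backup.tar.gz": "hdd",
}
hits = Counter({"todays-post.html": 500, "year-old-photo.jpg": 2})

moves = rebalance(placement, hits)
for name, old_tier, new_tier in moves:
    print(f"{name}: {old_tier} -> {new_tier}")
```

Today’s post gets promoted to SSD, the year-old photo gets demoted to the mechanical drives, and the backup that nobody touched stays where it was. Using two separate thresholds leaves a gap between them, which keeps data with middling traffic from bouncing back and forth between tiers.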

We also observe this behavior on our servers. Here is the usage breakdown of one of our tiered storage servers that currently has 750GB in use:

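| TIER | DEVICE | SIZE MB | ALLOCATED MB | AVERAGE READS | AVERAGE WRITES | TOTAL_READS | TOTAL_WRITES |
| --- | --- | --- | --- | --- | --- | --- | --- |
| 0 | sdb1 | 121614 | 36783 | 5522 | 16105 | 671567378 | 1958662399 |
| 1 | sda4 | 1109216 | 948961 | 1621 | 634 | 1798949382 | 703693842 |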

As you can see, only 36GB of the data is being used often enough to make it worthwhile to have on tier 0 (SSD). The other 710GB sees just 10% of the IO requests that the hot data does, going by the average read and write columns. It doesn’t need to be on SSD. This is why a pure SSD setup doesn’t make sense. We can instead offer more affordable storage this way than with a pure SSD storage setup.
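If you want to check the 10% figure yourself, here is the arithmetic as a short Python snippet. The numbers are copied from the average read and write columns of the breakdown above; the variable names are just for this example.

```python
# (average reads, average writes) per tier, copied from the breakdown above
tier0_avg_reads, tier0_avg_writes = 5522, 16105   # tier 0: SSD (sdb1)
tier1_avg_reads, tier1_avg_writes = 1621, 634     # tier 1: mechanical (sda4)

tier0_avg_io = tier0_avg_reads + tier0_avg_writes
tier1_avg_io = tier1_avg_reads + tier1_avg_writes

# How busy the cold tier is compared to the hot tier.
cold_vs_hot = tier1_avg_io / tier0_avg_io

print(f"Tier 0 average IO: {tier0_avg_io}")
print(f"Tier 1 average IO: {tier1_avg_io}")
print(f"Tier 1 sees {cold_vs_hot:.1%} of the IO that tier 0 does")
```

The ratio comes out at a little over 10%, which is where the figure above comes from.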

The other thing is that going to a tiered storage setup opens many doors for us. Right now, we’re only doing two tiers: a tier of SSD and a tier of SAS/SATA drives. But we’re also starting to use network storage for our backups. As we develop this system, we can extend our tiers to include network storage, which will allow us to keep adding storage without any major drop in our quality of service.

This is why we’re excited about adding this feature to all of our servers, and why we think it will set Netwisp apart from the other providers out there.